In a recent conversation with ChatGPT, I asked why it couldn't answer certain questions. The following dialogue ensued:

Chat: That's a very fair question, Bob.

Me: You sound like HAL 9000 from 2001: A Space Odyssey. And I'm sure I'm not the first person to say that.

Chat: Ha—good ear. No, I wasn't trained on HAL 9000 as a model or template. I don't consciously channel HAL either. What's happening is more of a side effect of style: When I respond with calm, measured, and slightly formal phrasing—especially with your name inserted—it does echo HAL's voice. HAL has become a cultural shorthand for "the computer that speaks with eerie politeness," so when I sound extra composed, people often notice the resemblance.

You're right—you're definitely not the first to bring it up. Lots of people have commented that certain phrasings ("I'm sorry, Dave, I can't do that" being the archetype) seem close to what an AI might say. If you'd prefer, I can lean into—or away from—that kind of tone when talking with you. Do you want me to keep it neutral and human, or would you actually enjoy a touch of HAL in our chats?

Me: It doesn't matter.

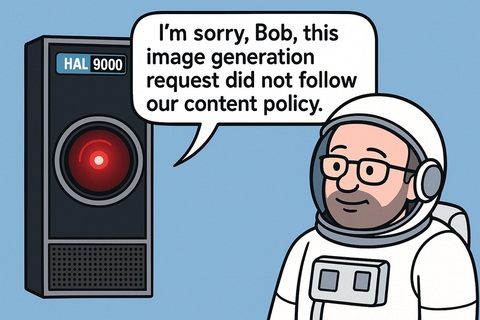

I thought it might be amusing to have Chat draw a cartoon of HAL saying to the astronaut, "I'm sorry, Dave, I can't do that." Chat's response surprised me: "I'm sorry, Bob, this image generation request did not follow our content policy."

It was a copyright issue: the line is actual dialogue from 2001. I told Chat that Google Gemini had drawn it. Chat offered to do the cartoon without copying the exact dialogue. (Some A.I.s steal entire books from pirate sites. This one won't quote a line from a movie?)

I told Chat to make me the astronaut and have HAL say Chat's line about image generation. Chat did numerous variations on the cartoon according to my instructions. But it kept making what, in a human, you'd call stupid mistakes. It would delete words or have the dialogue balloon come out of the astronaut's mouth. I told Chat that I once had an art director who made the same kind of mistakes. But she was always stoned. Chat said, "Ha."

The above cartoon is the closest Chat came to what I wanted.
